Introduction: The Art of Text Classification and Its Importance in Data Science

Text classification is a core task in natural language processing (NLP) and machine learning: the goal is to assign predefined categories, or “labels,” to a text based on its content. As the volume of text from social media, online reviews, and customer feedback grows, text classification has become an essential tool for sentiment analysis, spam detection, document organization, and more. This article covers six practices for improving the accuracy and efficiency of your text classification models.

1. Preprocessing and Cleaning Text Data

The quality of your text data plays a significant role in the performance of your text classification model. Raw text data often contains inconsistencies, irrelevant information, and noise, which can hinder the model’s ability to accurately classify the text. By preprocessing and cleaning the text data, you can improve the model’s performance and streamline the training process.

1.1 Tokenization

Tokenization is the process of breaking down the text into individual words or tokens. This step is crucial for creating a structured representation of the text data, which can then be used for feature extraction and model training.
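As a minimal sketch of this step, the snippet below splits text into word tokens with a regular expression. The `tokenize` helper and its pattern are illustrative assumptions, not a standard; production tokenizers handle many more cases (URLs, emoji, hyphenation, non-Latin scripts).

```python
import re

# Hypothetical helper: matches runs of letters/digits and keeps a trailing
# apostrophe part such as the "'t" in "wasn't".
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?")

def tokenize(text):
    """Return the list of word tokens found in `text`, in order."""
    return TOKEN_PATTERN.findall(text)

tokens = tokenize("The movie wasn't great, but the soundtrack was!")
print(tokens)
# ['The', 'movie', "wasn't", 'great', 'but', 'the', 'soundtrack', 'was']
```

Punctuation is dropped here; whether that is appropriate depends on the task, since punctuation can itself be a useful signal.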

1.2 Lowercasing

Converting all text to lowercase merges variants such as “Great” and “great” into a single token, which shrinks the vocabulary and keeps the representation consistent. The trade-off is that case sometimes carries meaning (for example, “US” versus “us”), so check whether your task depends on it before lowercasing everything.
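The effect on vocabulary size can be seen in a small sketch. The token list is made up for illustration.

```python
# Illustrative tokens; in practice these come from your tokenizer.
tokens = ["Great", "great", "GREAT", "Apple", "apple"]

vocab_cased = set(tokens)                   # case-sensitive vocabulary
vocab_lower = {t.lower() for t in tokens}   # vocabulary after lowercasing

print(len(vocab_cased), len(vocab_lower))   # 5 2
```

Note that "Apple" (the company) and "apple" (the fruit) collapse into one entry, which is exactly the kind of distinction to check before applying this step.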

1.3 Stopword Removal

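Stopwords are common words, such as “the,” “and,” and “is,” which often carry little information for text classification tasks. Removing them can reduce noise and shrink the feature space. Review the stopword list against your task first, though: in sentiment analysis, for example, negations such as “not” can reverse the meaning of a sentence and are usually worth keeping.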
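A minimal sketch of stopword filtering follows. The `STOPWORDS` set is a tiny illustrative list chosen for this example, not a standard one; libraries ship much longer lists.

```python
# Tiny illustrative stopword list (an assumption for this example).
STOPWORDS = {"the", "and", "is", "a", "of", "to"}

def remove_stopwords(tokens, stopwords=STOPWORDS):
    """Drop tokens whose lowercase form is in the stopword set."""
    return [t for t in tokens if t.lower() not in stopwords]

filtered = remove_stopwords(["The", "plot", "is", "thin", "and", "slow"])
print(filtered)  # ['plot', 'thin', 'slow']
```

Passing the set as a parameter makes it easy to swap in a task-specific list.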

1.4 Stemming and Lemmatization

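Stemming and lemmatization both reduce words to a base form, but they work differently. Stemming strips suffixes with heuristic rules, which is fast but can produce non-words; lemmatization uses a vocabulary and morphological analysis to return the dictionary form (the lemma), which is slower but more accurate. Either technique consolidates inflected variants of a word, reducing the dimensionality of the data and often improving the model’s performance.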
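The difference between the two approaches can be sketched with toy versions of each. Both helpers below are deliberately simplified assumptions: `toy_stem` uses four suffix rules (real stemmers such as Porter's use many more), and `toy_lemmatize` uses a hand-written lookup table in place of a real morphological dictionary.

```python
# Toy suffix rules, checked in order (an assumption for illustration).
SUFFIXES = ("ing", "ed", "es", "s")

def toy_stem(word):
    """Strip the first matching suffix if at least 3 characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

# Hypothetical lemma table standing in for a real dictionary.
LEMMAS = {"ran": "run", "better": "good", "mice": "mouse"}

def toy_lemmatize(word):
    """Look the word up in the lemma table; fall back to the word itself."""
    return LEMMAS.get(word, word)

print([toy_stem(w) for w in ["jumping", "jumped", "jumps"]])  # ['jump', 'jump', 'jump']
print(toy_stem("running"))    # 'runn' (a non-word: rule-based stemming is crude)
print(toy_stem("ran"))        # 'ran'  (no suffix rule applies)
print(toy_lemmatize("ran"))   # 'run'  (a dictionary lookup handles irregular forms)
```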

2. Feature Extraction and Representation

Once the text data has been preprocessed and cleaned, the next step is to extract meaningful features and represent the text in a format that can be used for model training. Common methods for feature extraction and representation in text classification include:

2.1 Bag of Words

The Bag of Words (BoW) representation is a simple and widely used method for converting text data into numerical features. BoW represents a document as a vector of word counts over a fixed vocabulary, discarding word order but keeping how often each word occurs.
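A minimal sketch of BoW on pre-tokenized documents is shown below. The helper names `build_vocab` and `bow_vector` are assumptions for this example; in practice a library vectorizer such as scikit-learn's `CountVectorizer` does the same job.

```python
from collections import Counter

def build_vocab(docs):
    """Sorted list of every distinct token across all documents."""
    return sorted({tok for doc in docs for tok in doc})

def bow_vector(doc, vocab):
    """Count of each vocabulary term in `doc`, in vocabulary order."""
    counts = Counter(doc)
    return [counts[term] for term in vocab]

docs = [["good", "movie", "good", "plot"], ["bad", "movie"]]
vocab = build_vocab(docs)
vectors = [bow_vector(doc, vocab) for doc in docs]

print(vocab)    # ['bad', 'good', 'movie', 'plot']
print(vectors)  # [[0, 2, 1, 1], [1, 0, 1, 0]]
```

Each vector has one position per vocabulary term, so vectors from different documents are directly comparable.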

2.2 Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF refines raw counts by weighting each word by both its frequency in a document (term frequency) and its rarity across the whole corpus (inverse document frequency). Words that are frequent in one document but appear in few others receive high weights, while words that appear almost everywhere are down-weighted. This usually gives the model more discriminative features than raw counts.
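The weighting can be sketched with the textbook form, where the weight of a term in a document is (count / document length) × log(N / df), with N the number of documents and df the number of documents containing the term. This is one common variant, assumed here for illustration; libraries such as scikit-learn use smoothed versions of the IDF term, so their numbers will differ.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    Uses unsmoothed tf * log(N / df); an illustrative variant only.
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

docs = [["good", "movie"], ["bad", "movie"], ["slow", "movie"]]
weights = tf_idf(docs)

# "movie" appears in every document, so its IDF is log(1) = 0.
print(weights[1]["movie"])  # 0.0
# "bad" appears in only one document, so it gets a positive weight.
print(weights[1]["bad"] > weights[1]["movie"])  # True
```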

2.3 Word Embeddings

Word embeddings, such as Word2Vec and GloVe, are dense vector representations of words that capture semantic similarity in a continuous space: words used in similar contexts end up with nearby vectors. Because of this, embeddings can improve text classification models, since they let the model generalize across related words that BoW and TF-IDF treat as unrelated.
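The idea can be illustrated with a toy lookup table. The three-dimensional vectors below are made up for this example; real Word2Vec or GloVe vectors are learned from large corpora and typically have 50 to 300 dimensions. Averaging word vectors into a document vector is one simple way (among several) to feed embeddings to a classifier.

```python
import math

# Made-up 3-d vectors for illustration only; not real pretrained embeddings.
EMBEDDINGS = {
    "good":  [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "bad":   [-0.9, 0.1, 0.0],
}

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def doc_vector(tokens, embeddings=EMBEDDINGS):
    """Average the vectors of known tokens; None if no token is known."""
    vecs = [embeddings[t] for t in tokens if t in embeddings]
    if not vecs:
        return None
    dim = len(vecs[0])
    return [sum(v[i] for v in vecs) / len(vecs) for i in range(dim)]

# Related words are closer than opposite ones in this toy space.
print(cosine(EMBEDDINGS["good"], EMBEDDINGS["great"]) >
      cosine(EMBEDDINGS["good"], EMBEDDINGS["bad"]))  # True

# Out-of-vocabulary tokens ("unknown") are skipped when averaging.
print(doc_vector(["good", "great", "unknown"]))
```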

3. Selecting the Right Model

The choice of model for your text classification task can greatly impact the performance of the system. There are various models available, ranging from traditional machine learning algorithms, such as Naive Bayes, Support Vector Machines, and Decision Trees, to more advanced deep learning techniques, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). It is essential to evaluate the performance of different models on your specific task and select the one that best suits your needs and data characteristics.

3.1 Traditional Machine Learning Models

Traditional machine learning models, such as Naive Bayes, Support Vector Machines, and Decision Trees, can be effective for text classification tasks with relatively small datasets and limited computational resources. These models often require less training time and are usually easier to interpret, making them suitable for a wide range of applications.
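To show how little machinery such a model needs, here is a minimal sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The class name, the four-document training set, and the choice to skip unseen words are all assumptions for illustration; in practice you would use a tested library implementation.

```python
import math
from collections import Counter, defaultdict

class NaiveBayes:
    """Toy multinomial Naive Bayes over tokenized documents."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.word_counts = defaultdict(Counter)   # token counts per class
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc)
        self.vocab = {tok for doc in docs for tok in doc}
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.class_counts.items():
            total = sum(self.word_counts[label].values())
            score = math.log(count / n_docs)      # log prior
            for tok in doc:
                if tok not in self.vocab:
                    continue                      # ignore unseen words
                # Add-one smoothing avoids zero probabilities.
                likelihood = (self.word_counts[label][tok] + 1) / (total + len(self.vocab))
                score += math.log(likelihood)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Made-up training data for illustration.
train_docs = [
    ["free", "prize", "now"],
    ["win", "free", "cash"],
    ["meeting", "at", "noon"],
    ["lunch", "meeting", "tomorrow"],
]
train_labels = ["spam", "spam", "ham", "ham"]

model = NaiveBayes().fit(train_docs, train_labels)
print(model.predict(["free", "cash", "prize"]))   # spam
print(model.predict(["meeting", "tomorrow"]))     # ham
```

The per-class word counts are directly inspectable, which is part of why models like this are considered easy to interpret.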

3.2 Deep Learning Models

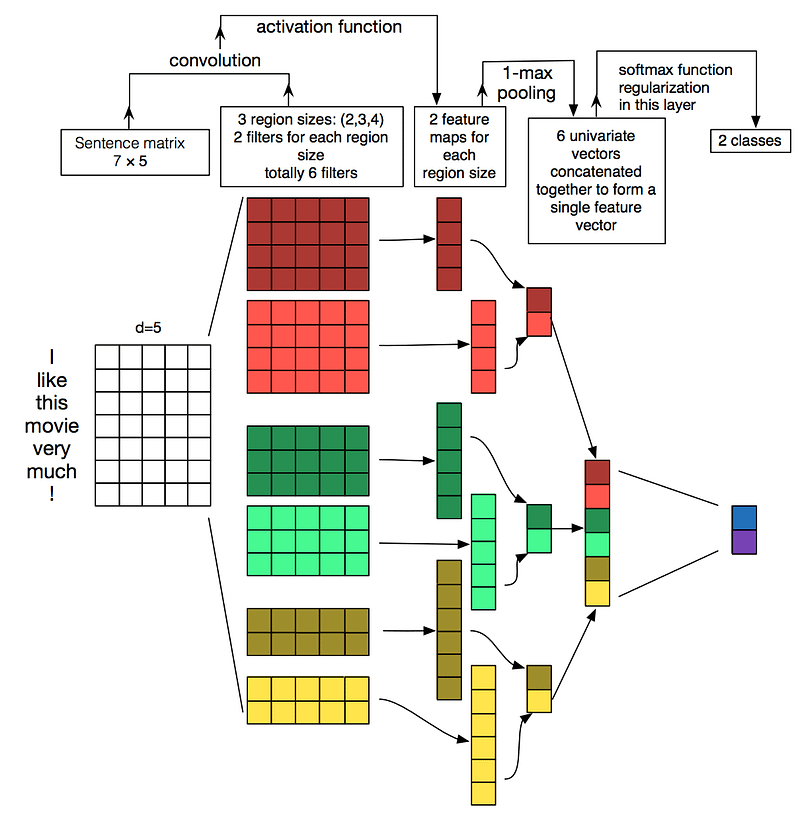

Deep learning models, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), have achieved strong results on many text classification tasks, particularly when large labeled datasets are available. These models learn features and representations directly from the text data rather than relying on hand-engineered features, which can make classification more accurate and robust. However, deep learning models typically require larger datasets and more computational resources for training.

4. Handling Imbalanced Data

Imbalanced data is a common challenge in text classification tasks, where some categories have significantly fewer examples than others. Imbalanced datasets can lead to poor model performance, as the model may become biased towards the majority class. To address this issue, consider using techniques such as:

4.1 Resampling

Resampling involves either oversampling the minority class, undersampling the majority class, or a combination of both, to create a more balanced training set. This gives the model comparable exposure to every category and reduces the impact of class imbalance. Apply resampling only to the training split, so that validation and test sets keep the real class distribution.
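Random oversampling, the simplest of these options, can be sketched as follows. The `random_oversample` helper is an assumption for this example: it duplicates randomly chosen minority examples until every class matches the largest one. Duplication adds no new information, so it can encourage overfitting on very small minority classes.

```python
import random
from collections import Counter

def random_oversample(samples, labels, seed=0):
    """Duplicate minority-class samples until all classes are the same size."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())

    out_samples, out_labels = [], []
    for label, items in by_class.items():
        # Draw extra copies (with replacement) to reach the target size.
        extra = [rng.choice(items) for _ in range(target - len(items))]
        for sample in items + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels

# Made-up training split: four "ham" messages and one "spam" message.
samples = ["h1", "h2", "h3", "h4", "s1"]
labels = ["ham", "ham", "ham", "ham", "spam"]

new_samples, new_labels = random_oversample(samples, labels)
print(Counter(new_labels))  # Counter({'ham': 4, 'spam': 4})
```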

4.2 Weighted Loss Function

Incorporating a weighted loss function in your model can help penalize misclassifications of the minority class more heavily, encouraging the model to pay more attention to underrepresented categories.
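One common way to choose the weights is inverse class frequency: weight = N / (K × n_c), where N is the number of samples, K the number of classes, and n_c the size of class c. The sketch below computes these weights and a weighted cross-entropy. Both helper names are assumptions, and normalizing by the sum of weights is just one possible convention.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: N / (K * n_c) for each class c."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}

def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean negative log-likelihood.

    probs: one {label: predicted probability} dict per example.
    """
    total = sum(weights[y] * -math.log(p[y]) for p, y in zip(probs, labels))
    return total / sum(weights[y] for y in labels)

# Made-up label distribution: 9 "ham" to 1 "spam".
weights = balanced_class_weights(["ham"] * 9 + ["spam"])
print(weights["spam"])  # 5.0 (the rare class gets the larger weight)

# A model that always favors "ham": right on the first example, wrong on the second.
probs = [{"ham": 0.9, "spam": 0.1}, {"ham": 0.9, "spam": 0.1}]
labels = ["ham", "spam"]

uniform = {"ham": 1.0, "spam": 1.0}
print(weighted_cross_entropy(probs, labels, weights) >
      weighted_cross_entropy(probs, labels, uniform))  # True
```

With the balanced weights, the mistake on the rare "spam" example dominates the loss, which is the intended effect.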

5. Model Evaluation and Hyperparameter Tuning

Properly evaluating your text classification model’s performance and tuning its hyperparameters are essential steps for achieving optimal results. Consider the following best practices:

5.1 Cross-Validation

Cross-validation assesses a model by dividing the dataset into multiple folds, then repeatedly training on all but one fold and testing on the held-out fold. Averaging the scores across folds gives a more reliable estimate of the model’s performance than a single train/test split and makes overfitting easier to detect.
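The procedure can be sketched in plain Python. The helpers below are assumptions for illustration: they split indices into contiguous folds without shuffling or stratification (both of which you would normally want, especially with imbalanced classes), and the majority-class "model" is only a stand-in for a real classifier.

```python
from collections import Counter

def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most 1.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        yield indices[:start] + indices[start + size:], indices[start:start + size]
        start += size

def cross_val_accuracy(samples, labels, k, fit, predict):
    """Mean accuracy over k folds for the given fit/predict callables."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(samples), k):
        model = fit([samples[i] for i in train_idx], [labels[i] for i in train_idx])
        correct = sum(predict(model, samples[i]) == labels[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)

# Stand-in baseline: always predict the most common training label.
def fit_majority(samples, labels):
    return Counter(labels).most_common(1)[0][0]

def predict_majority(model, sample):
    return model

samples = list(range(9))
labels = ["a", "a", "a", "b", "a", "a", "a", "b", "a"]
score = cross_val_accuracy(samples, labels, 3, fit_majority, predict_majority)
print(round(score, 4))  # 0.7778
```

A majority-class baseline like this is also a useful sanity check: a real model should beat it by a clear margin.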

5.2 Hyperparameter Tuning

Hyperparameter tuning involves adjusting the settings of your model to optimize its performance. Common hyperparameters in text classification models include the learning rate, the number of hidden layers, and the size of the embedding space. Use techniques such as grid search or random search to systematically explore different combinations of hyperparameters and find the optimal configuration for your model.
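Grid search amounts to evaluating every combination in a parameter grid and keeping the best one, as in the sketch below. The `grid_search` helper is an assumption for this example, and `toy_evaluate` is a made-up scoring function standing in for a real cross-validated score.

```python
import itertools

def grid_search(param_grid, evaluate):
    """Try every combination in `param_grid`; return (best_params, best_score)."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_evaluate(params):
    """Made-up score that peaks at learning_rate=0.01 and embedding_dim=100.

    In a real project this would train the model and return a
    cross-validated metric.
    """
    return (-abs(params["learning_rate"] - 0.01)
            - abs(params["embedding_dim"] - 100) / 1000)

param_grid = {
    "learning_rate": [0.1, 0.01, 0.001],
    "embedding_dim": [50, 100, 200],
}
best_params, best_score = grid_search(param_grid, toy_evaluate)
print(best_params)  # {'embedding_dim': 100, 'learning_rate': 0.01}
```

The number of combinations grows multiplicatively with each added hyperparameter (here 3 × 3 = 9), which is why random search is often preferred for larger search spaces.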

6. Continuous Model Improvement

As with any machine learning project, it is essential to continuously improve your text classification model to maintain its performance and adapt to changing data and requirements.

6.1 Regular Model Updates

Regularly update your model with new training data to ensure that it remains current and relevant. This can help prevent model degradation and ensure that the model continues to perform well as new text data becomes available.

6.2 Model Monitoring and Evaluation

Monitor the performance of your deployed text classification model to identify potential issues and areas for improvement. By regularly evaluating your model and analyzing its predictions, you can identify trends and patterns that may require further investigation or model adjustments.
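One simple form of monitoring is to track accuracy over a sliding window of recent predictions for which the true label later becomes known. The `AccuracyMonitor` class, its window size, and its threshold are all hypothetical choices for this sketch; a real deployment would also track per-class metrics and input drift.

```python
from collections import deque

class AccuracyMonitor:
    """Rolling accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # True where prediction was correct
        self.threshold = threshold

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """True once the window is full and accuracy is below the threshold."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=4, threshold=0.75)
for _ in range(4):
    monitor.record("spam", "spam")   # four correct predictions
print(monitor.accuracy(), monitor.needs_attention())  # 1.0 False

for _ in range(2):
    monitor.record("spam", "ham")    # two mistakes push out two older hits
print(monitor.accuracy(), monitor.needs_attention())  # 0.5 True
```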

Summary

By following the six practices outlined in this article, you can improve the performance of your text classification models in your NLP projects. From preprocessing and cleaning text data to selecting the right model, handling imbalanced data, and continuously improving your model, these practices help you get more out of the information contained in text data.
