Word2Vec is one of the most influential algorithms in Natural Language Processing (NLP). It changed how text is represented for machine learning by replacing sparse word counts with dense vectors that reflect how words are actually used. This guide covers the fundamentals of Word2Vec, how it works, its benefits and use cases, and its influence on later NLP models.

Word2Vec: An Introduction

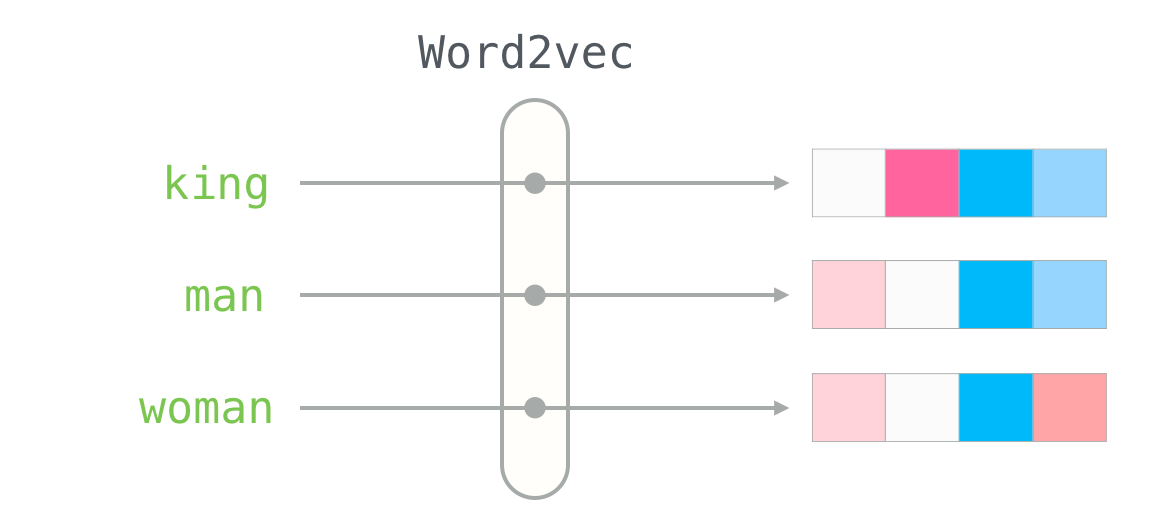

Word2Vec is an algorithm introduced in 2013 by Tomas Mikolov and colleagues at Google. It maps words or phrases from a vocabulary to vectors of real numbers, learned from the contexts in which they appear. Because words used in similar contexts end up with similar vectors, the resulting continuous vector space preserves semantic relationships between words, which traditional bag-of-words models cannot do.

How Word2Vec Works

Word2Vec uses one of two model architectures: Continuous Bag-of-Words (CBOW) or Skip-Gram. Both are based on the idea that the meaning of a word can be inferred from the contexts it appears in.

1. CBOW: The CBOW model predicts a word given its context. The context is defined as a window of surrounding words. For instance, in the sentence “The cat sat on the mat,” with a window of three words on each side, the context for the target word ‘sat’ is ‘The’, ‘cat’, ‘on’, ‘the’, and ‘mat’.

2. Skip-Gram: The Skip-Gram model reverses CBOW: it predicts the context words from a given target word. Using the previous example, given ‘sat’, the model is trained to predict ‘The’, ‘cat’, ‘on’, ‘the’, and ‘mat’.
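The difference between the two architectures is easiest to see in the training examples each one is built from. The following is a minimal sketch, not the reference implementation: the helper names (`context_window`, `cbow_examples`, `skipgram_examples`) are hypothetical, tokens are lowercased for simplicity, and a window of three words on each side is assumed because it reproduces the context listed for ‘sat’ above.

```python
def context_window(tokens, index, window):
    """Return the tokens within `window` positions of tokens[index], excluding it."""
    left = tokens[max(0, index - window):index]
    right = tokens[index + 1:index + 1 + window]
    return left + right


def cbow_examples(tokens, window):
    """CBOW: one (context words, target word) example per position."""
    return [(context_window(tokens, i, window), tokens[i]) for i in range(len(tokens))]


def skipgram_examples(tokens, window):
    """Skip-Gram: one (target word, context word) pair per context word."""
    return [
        (tokens[i], context)
        for i in range(len(tokens))
        for context in context_window(tokens, i, window)
    ]


tokens = "the cat sat on the mat".split()
sat_index = tokens.index("sat")

cbow = cbow_examples(tokens, window=3)
skipgram = skipgram_examples(tokens, window=3)

# CBOW sees the whole context at once and predicts 'sat'.
print(cbow[sat_index])

# Skip-Gram sees 'sat' and predicts each context word separately.
print([pair for pair in skipgram if pair[0] == "sat"])
```

CBOW produces one example per word position, while Skip-Gram produces one example per (target, context) pair, so the same sentence yields more training examples under Skip-Gram.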

Word2Vec trains these models with a shallow neural network: a single hidden layer whose learned weights become the word vectors. After training, words that appear in similar contexts are located close to each other in the vector space, preserving semantic relationships.
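"Close to each other" is usually measured with cosine similarity. The sketch below illustrates the idea with hand-made four-dimensional vectors that were invented for this example; real Word2Vec vectors are learned from a corpus and have many more dimensions. The names `toy_vectors`, `cosine`, and `most_similar` are hypothetical helpers, not part of any library.

```python
import math

# Invented vectors for illustration only (not learned from data).
# Rough meaning of each dimension: royalty, male, female, fruit.
toy_vectors = {
    "king":  [0.9, 0.4, 0.0, 0.0],
    "queen": [0.9, 0.0, 0.4, 0.0],
    "man":   [0.1, 0.4, 0.0, 0.0],
    "woman": [0.1, 0.0, 0.4, 0.0],
    "apple": [0.0, 0.0, 0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(query, vectors, exclude=()):
    """Return the word whose vector has the highest cosine similarity to `query`."""
    candidates = [word for word in vectors if word not in exclude]
    return max(candidates, key=lambda word: cosine(query, vectors[word]))


# Nearest neighbour of 'king' among the other words.
nearest_to_king = most_similar(toy_vectors["king"], toy_vectors, exclude={"king"})

# Relationship as vector arithmetic: king - man + woman.
analogy_query = [
    k - m + w
    for k, m, w in zip(toy_vectors["king"], toy_vectors["man"], toy_vectors["woman"])
]
analogy_answer = most_similar(
    analogy_query, toy_vectors, exclude={"king", "man", "woman"}
)

print(nearest_to_king, analogy_answer)
```

Excluding the query words from the candidates is a common convention for analogy queries, since the input words themselves often score highly.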

Advantages of Word2Vec

Word2Vec revolutionized NLP by offering several significant benefits:

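1. Semantic Understanding: Word2Vec captures semantic relationships between words, so synonyms and related words end up close together, and some analogies can be solved with vector arithmetic (for example, king - man + woman lands near queen). Antonyms are harder to separate: because they occur in similar contexts, they often end up close together as well.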

2. Dimensionality Reduction: Word2Vec represents each word as a dense vector, typically with a few hundred dimensions, instead of a one-hot vector with one dimension per vocabulary word. This far smaller representation improves model efficiency.

3. Efficient Training: Word2Vec’s shallow network, combined with training shortcuts such as negative sampling and hierarchical softmax, makes training efficient enough to handle corpora with billions of words.
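To make the dimensionality reduction point concrete, the sketch below compares a one-hot vector with a dense embedding for a five-word vocabulary. The embedding values are made up for illustration, and the helper names are hypothetical. It also shows that multiplying a one-hot vector by the embedding table is the same as looking up one row, which is why the dense vector can be fetched directly.

```python
vocabulary = ["the", "cat", "sat", "on", "mat"]
word_to_index = {word: i for i, word in enumerate(vocabulary)}

# Made-up embedding table: one row per vocabulary word, two columns.
# In a trained model these values would be learned.
embedding_dim = 2
embedding_table = [
    [0.1, 0.3],  # the
    [0.7, 0.2],  # cat
    [0.4, 0.9],  # sat
    [0.5, 0.5],  # on
    [0.8, 0.1],  # mat
]


def one_hot(word):
    """Vector with one slot per vocabulary word and a single 1.0."""
    vector = [0.0] * len(vocabulary)
    vector[word_to_index[word]] = 1.0
    return vector


def embed_by_lookup(word):
    """Fetch the word's row from the embedding table."""
    return embedding_table[word_to_index[word]]


def embed_by_matmul(word):
    """Multiply the one-hot vector by the embedding table."""
    hot = one_hot(word)
    return [
        sum(hot[i] * embedding_table[i][d] for i in range(len(vocabulary)))
        for d in range(embedding_dim)
    ]


print(len(one_hot("cat")), "dimensions as one-hot")
print(len(embed_by_lookup("cat")), "dimensions as a dense vector")
print(embed_by_matmul("cat") == embed_by_lookup("cat"))
```

The one-hot length grows with the vocabulary, while the dense length is a fixed choice that stays the same however many words are added.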

Use Cases of Word2Vec

Word2Vec finds application in numerous NLP tasks:

1. Sentiment Analysis: Word2Vec vectors can serve as input features for sentiment classifiers, letting them generalize across words with similar meanings. This is useful for tasks like analyzing customer reviews or social media monitoring.

2. Document Classification: Word vectors can be combined, for example by averaging, into document-level features that a classifier uses to categorize documents by their content.

3. Information Retrieval: Search engines can utilize Word2Vec to enhance their understanding of query terms, thereby improving the relevance of the search results.

4. Machine Translation: Word vectors trained separately for two languages can be aligned into a shared vector space, for example by learning a linear mapping from a small bilingual dictionary, so that words with the same meaning in different languages land near each other.
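Several of the use cases above rely on the same building block: turning a whole text into one vector and comparing vectors. The following is a minimal sketch of one common approach, averaging word vectors and ranking by cosine similarity. It is not the only way to build document vectors. The two-dimensional vectors, the document names, and the helper functions are all invented for illustration.

```python
import math

# Invented vectors for illustration (rough dimensions: animals, vehicles).
toy_vectors = {
    "cat": [0.9, 0.1],
    "dog": [0.8, 0.2],
    "kitten": [0.85, 0.05],
    "car": [0.1, 0.9],
    "truck": [0.2, 0.8],
    "engine": [0.05, 0.95],
}


def document_vector(text, vectors):
    """Average the vectors of the known words in `text`; unknown words are skipped."""
    known = [vectors[token] for token in text.lower().split() if token in vectors]
    if not known:
        raise ValueError("no known words in text")
    dim = len(known[0])
    return [sum(vector[d] for vector in known) / len(known) for d in range(dim)]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_documents(query, documents, vectors):
    """Return document names ordered from most to least similar to the query."""
    query_vector = document_vector(query, vectors)
    return sorted(
        documents,
        key=lambda name: cosine(query_vector, document_vector(documents[name], vectors)),
        reverse=True,
    )


documents = {
    "pets": "cat dog kitten",
    "vehicles": "car truck engine",
}

# 'puppy' has no vector in the toy table, so only 'kitten' contributes.
ranking = rank_documents("puppy kitten", documents, toy_vectors)
print(ranking)
```

The same document vectors could be passed to a classifier as features for sentiment analysis or document classification.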

Challenges and Limitations

Despite its many advantages, Word2Vec has limitations. It has no vector for out-of-vocabulary words, i.e., words not present in the training corpus. Moreover, it assigns a single vector to each word, so it does not account for polysemy, where a word has multiple meanings depending on context.
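Both limitations follow from the fact that a trained Word2Vec model behaves like a fixed lookup table from words to vectors. The sketch below uses an invented three-word table to illustrate this; `toy_vectors` and `embed` are hypothetical names, and returning `None` for a missing word is just one possible way to handle it.

```python
# Invented lookup table standing in for a trained model.
toy_vectors = {
    "river": [0.9, 0.1],
    "bank": [0.5, 0.5],
    "money": [0.1, 0.9],
}


def embed(sentence, vectors):
    """Look up each word; words missing from the table map to None."""
    return [(word, vectors.get(word)) for word in sentence.lower().split()]


river_sentence = embed("river bank", toy_vectors)
loan_sentence = embed("bank loan", toy_vectors)

# Polysemy: 'bank' gets the same vector next to 'river' and next to 'loan'.
print(dict(river_sentence)["bank"] == dict(loan_sentence)["bank"])

# Out-of-vocabulary: 'loan' was never in the table, so there is no vector for it.
print(dict(loan_sentence)["loan"])
```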

Word2Vec and the Future of NLP

As NLP continues to evolve, Word2Vec remains a foundational technique for representing text. Newer models have built upon its principles: FastText adds sub-word information, which helps with rare and unseen words, and BERT produces context-dependent word vectors, which addresses polysemy.
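Sub-word information in FastText means that a word is represented through its character n-grams, so an unseen word can still share pieces with known words. The sketch below only shows how such n-grams can be extracted. It is a simplified illustration, not FastText's implementation: the function name `char_ngrams` is hypothetical, and the `<` and `>` boundary markers and the default range of 3 to 6 characters follow the convention described for FastText.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """Return all character n-grams of the word wrapped in boundary markers."""
    wrapped = f"<{word}>"
    return [
        wrapped[i:i + n]
        for n in range(min_n, max_n + 1)
        for i in range(len(wrapped) - n + 1)
    ]


# Trigrams only, to keep the output short.
print(char_ngrams("where", min_n=3, max_n=3))

# Two related words share n-grams, so their representations can share information.
shared = set(char_ngrams("playing")) & set(char_ngrams("played"))
print(sorted(shared))
```

In this scheme a word's vector is built from the vectors of its n-grams, which is how a word missing from the training corpus can still receive a representation.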

Conclusion

Word2Vec’s ability to map words to vectors while preserving semantic relationships reshaped NLP. Its applications span sentiment analysis, document classification, information retrieval, and more. While it has limitations, its principles continue to inform newer, more sophisticated models, and it remains a useful baseline and a good starting point for understanding how modern language models represent text.
