Introduction: The Significance of Regression Analysis in Data Science and Analytics

Regression analysis is a fundamental statistical technique used to model the relationship between a dependent variable and one or more independent variables. It is widely employed in various fields, including data science, analytics, economics, and social sciences, to understand and predict the behavior of variables, evaluate the impact of interventions, and develop data-driven decision-making strategies. In this comprehensive guide, we will delve into the concept of regression analysis, explore various types of regression techniques, discuss their assumptions and limitations, and provide practical tips for implementing and optimizing regression models to derive meaningful insights from data.

Regression Analysis: An Overview

Regression analysis is a statistical method used to model and analyze the relationship between variables. The main goal of regression analysis is to estimate the values of one variable (dependent variable) based on the values of other variables (independent variables). Some key aspects of regression analysis include:

a. Dependent variable: The variable of interest, also known as the response or target variable, which is being predicted or explained by the independent variables.

b. Independent variables: The variables, also known as predictors or explanatory variables, used to predict or explain the dependent variable.

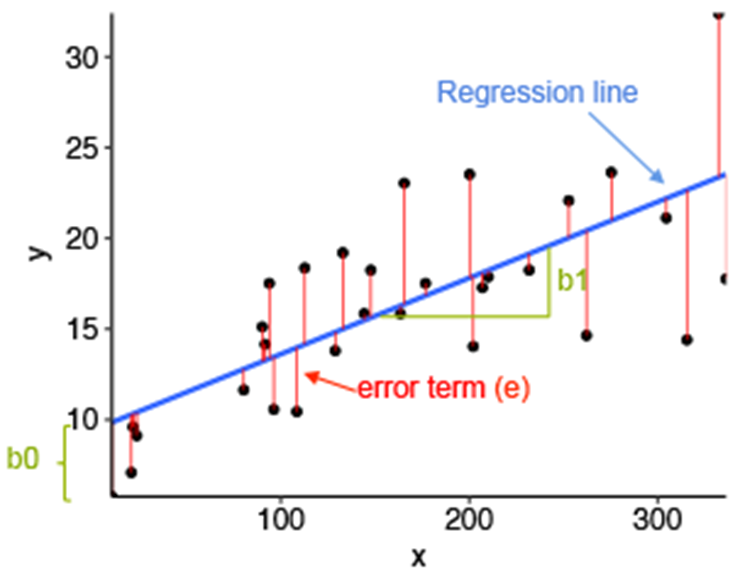

c. Regression model: A mathematical equation that describes the relationship between the dependent and independent variables.

d. Model fitting: The process of estimating the parameters of the regression model so as to minimize a measure of the difference between the observed and predicted values of the dependent variable, most commonly the sum of squared residuals (ordinary least squares).
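The four pieces above (dependent variable, independent variable, model, and fitting) can be sketched in a few lines of NumPy. This is a minimal illustration on synthetic data, not a prescribed workflow: the true intercept (2), slope (3), noise level, and sample size are all assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: one independent variable x and a dependent variable
# generated as y = 2 + 3x + noise.
x = rng.uniform(0, 10, size=200)
y = 2.0 + 3.0 * x + rng.normal(0, 1.0, size=200)

# Regression model: y is approximated by intercept + slope * x.
# The column of ones lets the intercept be estimated as a coefficient.
X = np.column_stack([np.ones_like(x), x])

# Model fitting: least squares picks the coefficients that minimize the
# sum of squared differences between observed and predicted y.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
intercept, slope = beta

y_pred = X @ beta
residuals = y - y_pred
print(f"intercept={intercept:.2f}, slope={slope:.2f}")
print(f"sum of squared residuals={np.sum(residuals ** 2):.1f}")
```

With this much data and modest noise, the estimated coefficients should land close to the values used to generate the data.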

Types of Regression Techniques

There are various types of regression techniques, each designed to model different types of relationships between variables:

a. Linear regression: Linear regression models the dependent variable as a linear function of the independent variables. With a single independent variable (simple linear regression), the fitted model is a straight line. It is the simplest and most widely used regression technique.

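b. Multiple regression: Multiple regression extends simple linear regression to two or more independent variables, so the fitted model is a plane or hyperplane rather than a line. This makes it possible to estimate the effect of each variable while holding the others constant.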

c. Polynomial regression: Polynomial regression models the relationship between the dependent variable and the independent variables using a polynomial function, allowing for curved relationships.

d. Logistic regression: Logistic regression is used for binary classification problems, modeling the probability of an outcome occurring based on the values of the independent variables.

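e. Ridge regression: Ridge regression is a regularization technique for linear regression that adds an L2 penalty (the sum of squared coefficients) to the fitting objective. The penalty shrinks coefficients toward zero, which stabilizes the estimates when the independent variables are highly correlated (multicollinearity).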

f. Lasso regression: Lasso regression is another regularization technique for linear regression that enforces sparsity in the model by using an L1 penalty term, effectively performing feature selection.

g. Elastic Net regression: Elastic Net regression combines Ridge and Lasso regression, incorporating both L1 and L2 penalty terms to balance the strengths and weaknesses of the two methods.
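To make the effect of a penalty term concrete, here is a small sketch of Ridge regression using its closed-form solution, compared with ordinary least squares on two nearly identical predictors. The data, the penalty strength of 10, and the helper name `ridge_coef` are assumptions for illustration. Lasso and Elastic Net have no closed form of this kind and are usually fitted iteratively, so they are not shown here.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100

# Hypothetical collinear predictors: x2 is almost a copy of x1.
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(0, 1.0, size=n)

# Center the data so the intercept is left out of the penalty.
Xc = X - X.mean(axis=0)
yc = y - y.mean()

def ridge_coef(Xc, yc, lam):
    """Closed-form Ridge solution: (X'X + lam * I)^-1 X'y.

    lam = 0 reduces to ordinary least squares.
    """
    p = Xc.shape[1]
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)

ols = ridge_coef(Xc, yc, 0.0)
ridge = ridge_coef(Xc, yc, 10.0)

print("OLS coefficients:  ", np.round(ols, 2))
print("Ridge coefficients:", np.round(ridge, 2))
```

For any positive penalty, the Ridge coefficient vector is shorter than the least squares one. With collinear predictors this typically replaces a pair of large, unstable coefficients with smaller values that share the effect between the two variables.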

Assumptions and Limitations of Regression Techniques

Classical linear regression rests on several assumptions that should hold, at least approximately, for the estimates and the inference drawn from them to be valid and reliable:

a. Linearity: The relationship between the dependent variable and the independent variables must be linear, or a suitable non-linear transformation (for example, a logarithm or a polynomial term) must be applied to the variables to achieve linearity.

b. Independence: The observations, and therefore the error terms, should be independent of each other. In addition, the independent variables should not be strongly correlated with one another (no severe multicollinearity).

c. Homoscedasticity: The variance of the error terms should be constant across all levels of the independent variables.

d. Normality: The error terms should be normally distributed. This matters mainly for confidence intervals and hypothesis tests, especially in small samples.

Violations of these assumptions can lead to biased or inefficient coefficient estimates and unreliable standard errors, which limits the validity and generalizability of the regression results.
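Some of these assumptions can be checked numerically. The sketch below computes variance inflation factors (VIF) to flag multicollinearity and runs a crude check for non-constant error variance. The synthetic data and the helper names `r_squared` and `vif` are assumptions for illustration. A VIF above roughly 5 to 10 is a common rule of thumb for concern, not a strict threshold.

```python
import numpy as np

def r_squared(X, y):
    """R-squared from regressing y on X (an intercept column is added here)."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)

def vif(X):
    """Variance inflation factor per column: 1 / (1 - R^2_j), where R^2_j
    comes from regressing column j on all the other columns."""
    return np.array([
        1.0 / (1.0 - r_squared(np.delete(X, j, axis=1), X[:, j]))
        for j in range(X.shape[1])
    ])

rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
x2 = x1 + 0.1 * rng.normal(size=n)  # strongly correlated with x1
x3 = rng.normal(size=n)             # unrelated to x1 and x2
X = np.column_stack([x1, x2, x3])

# The noise spread grows with |x3|, so homoscedasticity is violated on purpose.
y = 1.0 + 2.0 * x1 + 0.5 * x3 + rng.normal(size=n) * (0.5 + np.abs(x3))

vifs = vif(X)
print("VIF per predictor:", np.round(vifs, 1))

# Fit the full model and inspect the residuals.
A = np.column_stack([np.ones(n), X])
beta, *_ = np.linalg.lstsq(A, y, rcond=None)
resid = y - A @ beta

# Crude homoscedasticity check: squared residuals should be unrelated to
# the predictors. A clearly positive correlation suggests the error
# variance is not constant.
spread_corr = np.corrcoef(resid ** 2, np.abs(x3))[0, 1]
print(f"corr(resid^2, |x3|) = {spread_corr:.2f}")
```

In practice these numeric checks are usually paired with plots, such as residuals against fitted values and a normal Q-Q plot of the residuals.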

Implementing and Optimizing Regression Models

To implement and optimize regression models effectively, consider the following best practices:

a. Data preprocessing: Clean, preprocess, and transform your data to address missing values, outliers, and non-linearity, ensuring that the assumptions of the regression technique are met.

b. Feature selection: Use techniques such as forward selection, backward elimination, or Lasso regression to identify the most relevant and informative independent variables for your model.

c. Model selection: Choose the most appropriate regression technique for your data and problem, considering factors such as the type of relationship between variables, the number of independent variables, and the distribution of the dependent variable.

d. Model evaluation: Assess the performance of your regression model using relevant metrics, such as R-squared, adjusted R-squared, root mean squared error (RMSE), or mean absolute error (MAE), and compare the results to alternative models or baseline models to ensure that your model is robust and accurate.

e. Model validation: Validate your regression model using techniques such as cross-validation or holdout validation to ensure that it generalizes well to new data and is not overfitting.

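f. Regularization and optimization: Apply regularization techniques, such as Ridge, Lasso, or Elastic Net regression, to address multicollinearity or overfitting. Model coefficients are estimated by an optimization procedure (a closed-form solution or an iterative method such as gradient descent), while hyperparameters such as the penalty strength are tuned with a search procedure such as grid search combined with cross-validation.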
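The evaluation, validation, and tuning steps above can be combined into one short workflow. The following is a minimal NumPy sketch under stated assumptions: synthetic data with ten predictors (only two of which matter), a simple holdout split, 5-fold cross-validation, and a small hypothetical grid of Ridge penalty values. The function names `fit_ridge`, `rmse`, and `cv_rmse` are illustrative, not from any library.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Ridge fit with an unpenalized intercept (lam=0 is ordinary least squares)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    coef = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ yc)
    return y_mean - x_mean @ coef, coef

def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def cv_rmse(X, y, lam, k=5, seed=0):
    """Mean RMSE over k cross-validation folds."""
    idx = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(idx, k)
    scores = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        b0, b = fit_ridge(X[train], y[train], lam)
        scores.append(rmse(y[test], b0 + X[test] @ b))
    return float(np.mean(scores))

# Hypothetical data: 10 predictors, only the first two affect y.
rng = np.random.default_rng(1)
X = rng.normal(size=(120, 10))
y = 1.0 + 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(0, 1.0, size=120)

# Holdout validation: keep the last 30 rows out of all tuning.
X_train, X_test, y_train, y_test = X[:90], X[90:], y[:90], y[90:]

# Grid search: choose the penalty with the lowest cross-validated RMSE.
grid = [0.0, 0.1, 1.0, 10.0, 100.0]
cv_scores = {lam: cv_rmse(X_train, y_train, lam) for lam in grid}
best_lam = min(cv_scores, key=cv_scores.get)

# Model evaluation on the untouched holdout set.
b0, b = fit_ridge(X_train, y_train, best_lam)
pred = b0 + X_test @ b
test_rmse = rmse(y_test, pred)
test_mae = float(np.mean(np.abs(y_test - pred)))
r2 = 1.0 - np.sum((y_test - pred) ** 2) / np.sum((y_test - y_test.mean()) ** 2)

# Baseline for comparison: always predict the training mean.
baseline_rmse = rmse(y_test, np.full_like(y_test, y_train.mean()))

print(f"best lambda={best_lam}, RMSE={test_rmse:.2f}, MAE={test_mae:.2f}, R^2={r2:.2f}")
print(f"baseline RMSE={baseline_rmse:.2f}")
```

Keeping the holdout set out of the grid search is the important design choice here: the reported metrics then reflect performance on data that played no part in choosing the penalty.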

Real-World Applications of Regression Analysis

Regression analysis has been widely applied across various domains and industries to derive insights from data and inform decision-making, including:

a. Finance: Financial analysts and economists use regression analysis to model the relationship between stock prices, market indicators, economic factors, and corporate performance, allowing for informed investment decisions and risk management.

b. Marketing: Marketing professionals leverage regression analysis to understand the impact of advertising and promotional efforts on sales, customer acquisition, and retention, enabling them to optimize their marketing strategies and maximize return on investment.

c. Healthcare: Researchers and practitioners in healthcare employ regression analysis to model the relationship between patient characteristics, treatments, and outcomes, informing diagnosis, prognosis, and treatment planning.

d. Environmental science: Environmental scientists and policymakers utilize regression analysis to study the relationship between environmental factors, such as pollution levels or climate change indicators, and various ecological, social, or economic outcomes, informing environmental management and policy decisions.

Conclusion

Regression analysis is a powerful statistical tool that allows data scientists, analysts, and researchers to model the relationship between variables, predict outcomes, and derive valuable insights from data. Using it well means understanding the fundamentals, choosing a regression technique that suits the data, checking the assumptions and limitations of that technique, and following best practices for implementing and optimizing the model. With these in place, professionals across many domains can use regression analysis to support data-driven decisions in their fields.
