In machine learning, ensemble methods combine multiple models to achieve better predictive performance than any of the constituent models could achieve alone. One widely used ensemble method is the stack ensemble (or stacking), which can deliver high-quality predictions in complex scenarios. This article explains what stacking is, how it works, what benefits it offers, and where it may be heading.

Understanding Stack Ensembles

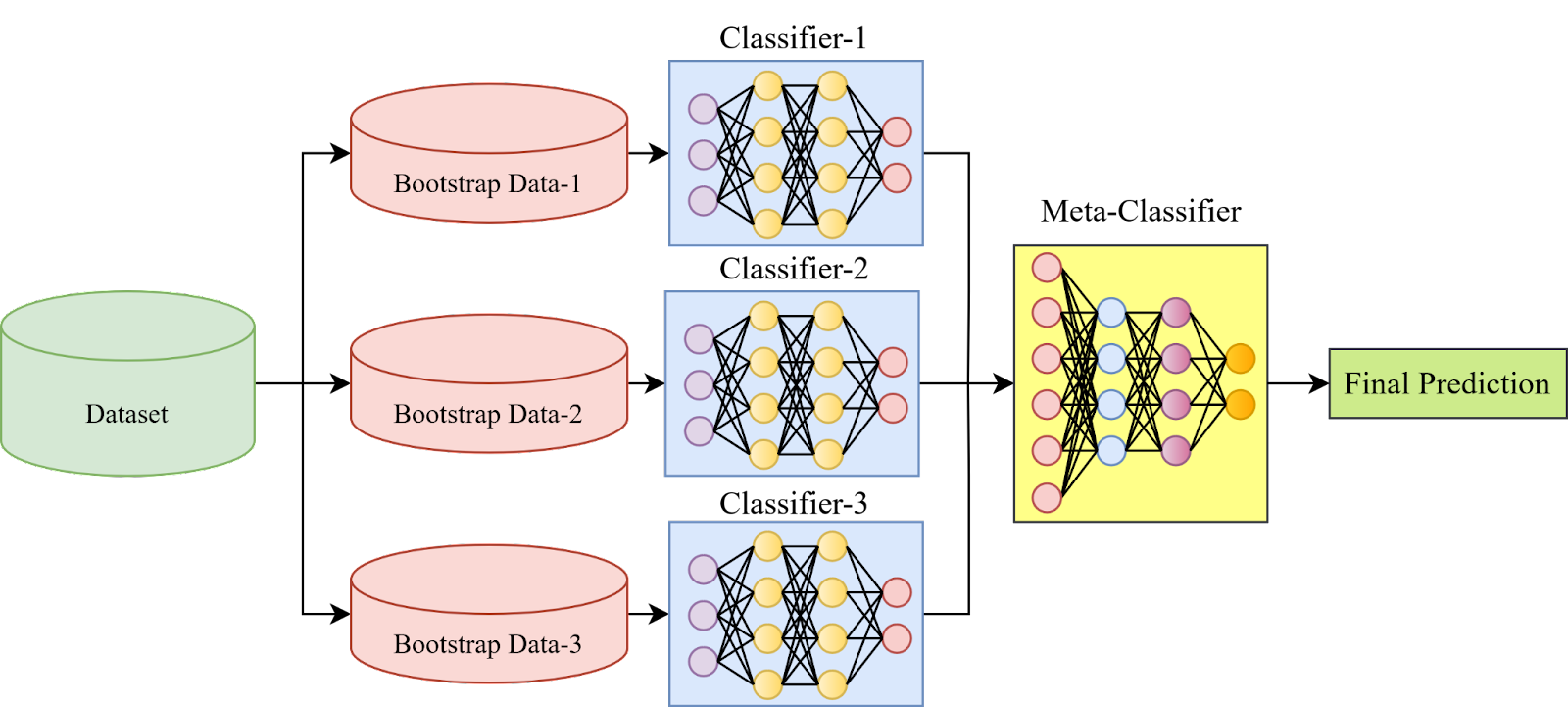

Stacking, also known as stacked generalization, is an ensemble learning technique that combines multiple models in a hierarchical manner to improve prediction accuracy. Rather than using simple functions (like voting or averaging) to combine predictions, stacking trains a meta-model to generate the final prediction based on the individual predictions of each base model.
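The difference from simple averaging can be written compactly. The notation is introduced here for illustration only: $h_1, \dots, h_M$ are the base models and $g$ is the learned meta-model.

```latex
\hat{y}_{\text{avg}}(x) = \frac{1}{M}\sum_{m=1}^{M} h_m(x)
\qquad\text{vs.}\qquad
\hat{y}_{\text{stack}}(x) = g\bigl(h_1(x), \dots, h_M(x)\bigr),
\qquad
g(z) = w_0 + \sum_{m=1}^{M} w_m z_m \ \text{(linear meta-model)}
```

With a linear meta-model, averaging is the special case $w_0 = 0$, $w_m = 1/M$. Stacking instead chooses the weights from data.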

The idea of stacking involves two key components:

1. Base Models: These are diverse machine learning models trained on the original training set.

2. Meta-model: Also known as the second-level model, it takes the outputs of the base models as inputs and learns how best to combine them into a final prediction.
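To make the two roles concrete, here is a minimal sketch using only the Python standard library. The classes `MeanRegressor` and `NearestNeighborRegressor` and the helper `meta_features` are hypothetical illustrations and are not taken from any library. Each base model exposes `fit`/`predict`, and the meta-model's input is one column of base-model predictions per sample.

```python
from typing import List, Sequence


class MeanRegressor:
    """Hypothetical base model: always predicts the mean of the training targets."""

    def fit(self, X: Sequence[float], y: Sequence[float]) -> "MeanRegressor":
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X: Sequence[float]) -> List[float]:
        return [self.mean_ for _ in X]


class NearestNeighborRegressor:
    """Hypothetical base model: 1-nearest-neighbour regression on a single feature."""

    def fit(self, X: Sequence[float], y: Sequence[float]) -> "NearestNeighborRegressor":
        self.X_, self.y_ = list(X), list(y)
        return self

    def predict(self, X: Sequence[float]) -> List[float]:
        preds = []
        for x in X:
            # Index of the closest training point (the first one wins on ties).
            j = min(range(len(self.X_)), key=lambda i: abs(self.X_[i] - x))
            preds.append(self.y_[j])
        return preds


def meta_features(base_models, X: Sequence[float]) -> List[List[float]]:
    """Meta-model inputs: one row per sample, one column per base model."""
    columns = [model.predict(X) for model in base_models]
    return [list(row) for row in zip(*columns)]


X_train = [0.0, 1.0, 2.0, 3.0]
y_train = [0.0, 2.0, 4.0, 6.0]
base_models = [MeanRegressor().fit(X_train, y_train),
               NearestNeighborRegressor().fit(X_train, y_train)]
print(meta_features(base_models, [1.2, 2.9]))  # → [[3.0, 2.0], [3.0, 6.0]]
```

Each row pairs the two base models' opinions about one sample. That row is all the meta-model ever sees.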

Functioning of Stack Ensembles

The stack ensemble method operates in a structured, two-level process:

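1. First Level: Multiple base models are trained independently on the same training dataset. They can come from different families of algorithms, such as decision trees, support vector machines, or neural networks. Their predictions become the input features for the second level. To keep these features honest, the predictions are usually produced out-of-fold. The training set is split into k folds, and each sample is predicted by base models trained on the other k-1 folds, so no model predicts data it was trained on.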

2. Second Level: The meta-model is trained on the base models' predictions, with the original targets as labels. Its job is to learn the best way to combine these predictions into a final output. Linear or logistic regression is a common, deliberately simple choice, although a decision tree or another learner can also serve as the meta-model.
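The two levels can be sketched end to end in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation:

- The base models `LinearRegressor1D` and `NearestNeighborRegressor` and all helper functions are hypothetical.
- The first level uses k-fold out-of-fold predictions, so each sample's meta-features come from base models that did not train on it.
- The meta-model is a linear regression solved through the normal equations, with a tiny ridge term added for numerical stability.

```python
from typing import List, Sequence


class LinearRegressor1D:
    """Hypothetical base model: ordinary least squares on one feature."""

    def fit(self, X: Sequence[float], y: Sequence[float]) -> "LinearRegressor1D":
        n = len(X)
        mx, my = sum(X) / n, sum(y) / n
        sxx = sum((x - mx) ** 2 for x in X)
        sxy = sum((x - mx) * (t - my) for x, t in zip(X, y))
        self.slope_ = sxy / sxx if sxx else 0.0
        self.intercept_ = my - self.slope_ * mx
        return self

    def predict(self, X: Sequence[float]) -> List[float]:
        return [self.intercept_ + self.slope_ * x for x in X]


class NearestNeighborRegressor:
    """Hypothetical base model: 1-nearest-neighbour regression on one feature."""

    def fit(self, X: Sequence[float], y: Sequence[float]) -> "NearestNeighborRegressor":
        self.X_, self.y_ = list(X), list(y)
        return self

    def predict(self, X: Sequence[float]) -> List[float]:
        return [self.y_[min(range(len(self.X_)), key=lambda i: abs(self.X_[i] - x))]
                for x in X]


def out_of_fold_predictions(model_classes, X, y, k):
    """First level: each sample is predicted by base models that never saw it."""
    n = len(X)
    Z = [[0.0] * len(model_classes) for _ in range(n)]
    for fold in range(k):
        # Interleaved folds (i % k) keep the sketch short; real pipelines usually shuffle.
        val = [i for i in range(n) if i % k == fold]
        train = [i for i in range(n) if i % k != fold]
        for m, cls in enumerate(model_classes):
            model = cls().fit([X[i] for i in train], [y[i] for i in train])
            for i, p in zip(val, model.predict([X[i] for i in val])):
                Z[i][m] = p
    return Z


def fit_linear_meta_model(Z, y, ridge=1e-8):
    """Second level: least-squares weights [w_0 (intercept), w_1, ..., w_M].

    Solves (A^T A + ridge * I) w = A^T y by Gauss-Jordan elimination; the tiny
    ridge term is an assumption added only for numerical stability.
    """
    A = [[1.0] + list(row) for row in Z]
    d = len(A[0])
    aug = [[sum(a[r] * a[c] for a in A) + (ridge if r == c else 0.0) for c in range(d)]
           + [sum(a[r] * t for a, t in zip(A, y))]
           for r in range(d)]
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(d):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [u - factor * v for u, v in zip(aug[r], aug[col])]
    return [aug[r][d] / aug[r][r] for r in range(d)]


def fit_stack(model_classes, X, y, k=5):
    Z = out_of_fold_predictions(model_classes, X, y, k)
    weights = fit_linear_meta_model(Z, y)
    # Refit each base model on the full training set for use at prediction time.
    base_models = [cls().fit(X, y) for cls in model_classes]
    return base_models, weights


def predict_stack(base_models, weights, X):
    columns = [model.predict(X) for model in base_models]
    return [weights[0] + sum(w * col[i] for w, col in zip(weights[1:], columns))
            for i in range(len(X))]


# Hypothetical toy data with a non-linear target.
X = [float(i) for i in range(20)]
y = [x * x for x in X]
base_models, weights = fit_stack([LinearRegressor1D, NearestNeighborRegressor], X, y, k=5)
preds = predict_stack(base_models, weights, [2.5, 7.5])
print("meta-model weights:", weights)
print("stacked predictions:", preds)
```

In practice, scikit-learn provides this pattern ready-made as `StackingRegressor` and `StackingClassifier`. Those classes also handle classification targets and configurable cross-validation.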

Benefits of Stack Ensembles

Stacking, as a sophisticated ensemble method, offers several benefits:

1. Increased Accuracy: By learning how to combine predictions from multiple models, stacking can achieve higher accuracy than any individual model. The gain is not guaranteed, however; it depends on how accurate and how diverse the base models are.

2. Model Diversity: Stacking can integrate very different types of models. When these models make different kinds of errors, the meta-model can exploit their complementary strengths, which often yields a more robust combined model for complex predictive tasks.

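3. Reduction of Overfitting: Combining several models can reduce variance and therefore the risk of overfitting compared with relying on a single, complex model. This benefit depends on training the meta-model on out-of-fold predictions. If it is trained on in-sample predictions instead, stacking can overfit more, not less.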

4. Handling Non-linearity: The base models can capture non-linear relationships between predictors and the target. A non-linear meta-model (for example, a decision tree) can additionally learn non-linear ways of combining their predictions.
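The accuracy argument can be checked on a toy case. The sketch below assumes a deliberately simple meta-model: a single learned weight `a` that blends two base models as `a * z1 + (1 - a) * z2`, fitted by least squares. Plain averaging is the special case `a = 0.5`, so on the meta-model's own training data the learned blend can never do worse than averaging. The prediction values are made-up numbers standing in for out-of-fold predictions from one accurate model and one biased model.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def fit_blend_weight(z1: Sequence[float], z2: Sequence[float], y: Sequence[float]) -> float:
    """Least-squares weight a minimising sum((a*z1 + (1-a)*z2 - y)^2).

    Writing the residual as a*(z1 - z2) - (y - z2) gives the closed form below.
    """
    num = sum((t - q) * (p - q) for p, q, t in zip(z1, z2, y))
    den = sum((p - q) ** 2 for p, q in zip(z1, z2))
    return num / den


# Hypothetical out-of-fold predictions: model 1 is accurate, model 2 is biased upward.
y = [3.0, 5.0, 7.0, 9.0, 11.0]
z1 = [3.2, 4.9, 7.1, 8.8, 11.1]
z2 = [5.0, 6.5, 9.5, 10.0, 14.0]

a = fit_blend_weight(z1, z2, y)
stacked = [a * p + (1 - a) * q for p, q in zip(z1, z2)]
averaged = [(p + q) / 2 for p, q in zip(z1, z2)]
print(f"learned weight on model 1: {a:.3f}")
print(f"MSE stacked: {mse(stacked, y):.4f}  MSE averaged: {mse(averaged, y):.4f}")
```

Here the learned weight ends up close to 1, so the meta-model relies almost entirely on the accurate model. Simple averaging, by contrast, gives the biased model equal say.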

Challenges and Considerations

While stacking offers significant benefits, it also comes with challenges. It requires careful setup: choosing diverse base models and an appropriate meta-model is essential. Its training process is also more involved than simple voting or averaging. Each base model is typically trained once per cross-validation fold and then again on the full dataset, which lengthens training time.
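The extra training cost can be estimated by counting model fits. The sketch below assumes a common setup:

- each base model is fitted once per fold to produce out-of-fold predictions;
- each base model is fitted once more on the full training set;
- the meta-model is fitted once.

Both functions are hypothetical helpers. Some pipelines skip the full-data refit and reuse the fold models instead, which changes the count.

```python
def count_stacking_fits(n_base_models: int, n_folds: int) -> int:
    """Number of model fits for one stacking run (assumed setup):
    n_base_models * n_folds fits for the out-of-fold predictions,
    n_base_models refits on the full training set, and one meta-model fit.
    """
    return n_base_models * n_folds + n_base_models + 1


def count_averaging_fits(n_base_models: int) -> int:
    """Simple averaging or voting fits each base model only once."""
    return n_base_models


print(count_stacking_fits(3, 5))  # → 19
print(count_averaging_fits(3))    # → 3
```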

Additionally, as with any machine learning technique, the meta-model must not overfit. The standard safeguard is cross-validation: the meta-model is trained on out-of-fold predictions. It therefore learns how the base models behave on data they have not seen, which helps it generalize to new data.
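A 1-nearest-neighbour base model shows why cross-validation matters here, because it memorizes its training data. In the sketch below, the helper names are hypothetical and the quadratic data are made up. The model's in-sample predictions are perfect, so a meta-model trained on them would trust it completely. Its out-of-fold predictions reveal its real error on unseen points.

```python
from typing import List, Sequence


def nn_predict(train_x: Sequence[float], train_y: Sequence[float], x: float) -> float:
    """Hypothetical 1-nearest-neighbour regressor on a single feature."""
    j = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[j]


def in_sample_predictions(X: Sequence[float], y: Sequence[float]) -> List[float]:
    # Each point is predicted by a model that was trained on it (leaky).
    return [nn_predict(X, y, x) for x in X]


def out_of_fold_predictions(X: Sequence[float], y: Sequence[float], k: int) -> List[float]:
    # Each point is predicted by a model trained on the other k-1 folds only.
    preds = [0.0] * len(X)
    for fold in range(k):
        train = [i for i in range(len(X)) if i % k != fold]
        train_x = [X[i] for i in train]
        train_y = [y[i] for i in train]
        for i in range(fold, len(X), k):
            preds[i] = nn_predict(train_x, train_y, X[i])
    return preds


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


X = [float(i) for i in range(10)]
y = [x * x for x in X]  # made-up non-linear target
in_sample_mse = mse(in_sample_predictions(X, y), y)
oof_mse = mse(out_of_fold_predictions(X, y, k=5), y)
print(in_sample_mse)            # → 0.0
print(oof_mse > in_sample_mse)  # → True
```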

Stack Ensembles in the Real World

Stack ensembles are used in many domains, including natural language processing, computer vision, and bioinformatics. They have also been part of many successful Kaggle competition entries, where small gains in predictive accuracy can decide the final ranking.

The Future of Stack Ensembles

As computational power continues to grow, the extra training cost of stack ensembles matters less, so they are likely to become more common. As the methods are refined further, more sophisticated stack ensembles may push predictive performance further still.

Conclusion

Stack ensembles are a significant advance among ensemble methods in machine learning. By combining the predictions of multiple models in a structured, hierarchical manner, they can improve predictive accuracy and robustness. They require careful implementation and bring some challenges. Even so, their ability to deliver high-quality predictions makes them a valuable tool in the machine learning toolkit, and they are likely to remain important as the field advances.
