Introduction

Data modeling is a critical component of modern database design and development. It involves the process of creating a visual representation of an organization’s data and its relationships to facilitate the design, implementation, and maintenance of databases. In this comprehensive guide, we’ll explore the various types of data models, their purposes, and the methodologies used to create them, providing you with a solid understanding of data modeling and its importance in database design.

What is Data Modeling?

Data modeling is the process of analyzing an organization’s data requirements and creating a blueprint that represents the structure, relationships, and constraints of the data. This blueprint, known as a data model, serves as a guide for database developers, administrators, and other stakeholders to design, build, and maintain an efficient and effective database system.

Data modeling can be categorized into three primary types: conceptual, logical, and physical. Each type of data model serves a distinct purpose and provides a different level of abstraction, allowing organizations to progressively refine their data models as they move through the database design process.

Conceptual Data Modeling

Conceptual data modeling is the first step in the data modeling process. It provides a high-level, abstract view of an organization’s data and its relationships, focusing on understanding the business requirements and identifying the main entities, attributes, and relationships.

Purpose: Conceptual data models help stakeholders, including business analysts, database developers, and non-technical users, understand the organization’s data requirements and identify the key entities and relationships. This understanding forms the basis for more detailed data modeling in subsequent stages.

Components: The main components of a conceptual data model are entities, attributes, and relationships. Entities represent real-world objects or concepts, such as customers, products, or orders. Attributes describe the properties of these entities, while relationships define how entities are related to one another.

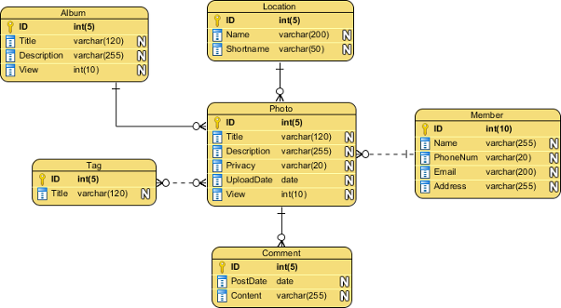

Methodologies: The Entity-Relationship (ER) model is the most widely used methodology for creating conceptual data models. The ER model uses simple graphical symbols, such as rectangles for entities, ovals for attributes, and diamonds for relationships, to visually represent the data structure.

Logical Data Modeling

Logical data modeling builds upon the conceptual data model by providing a more detailed representation of an organization’s data. It defines the data structure, relationships, and constraints without considering the specifics of the underlying database technology.

Purpose: Logical data models serve as a bridge between the high-level, abstract view provided by conceptual data models and the detailed, technology-specific representation provided by physical data models. They allow database developers to refine the data structure and relationships while still focusing on the organization’s data requirements rather than the technical implementation.

Components: In addition to entities, attributes, and relationships, logical data models introduce additional components, such as primary keys, foreign keys, and normalization rules, to ensure data integrity and reduce redundancy.

Methodologies: The ER model can also be used to create logical data models. However, additional notation, such as crow’s foot notation, may be used to represent cardinality and optionality constraints in relationships.

Physical Data Modeling

Physical data modeling is the final stage in the data modeling process, providing a detailed representation of the database’s structure and implementation in a specific database management system (DBMS).

Purpose: Physical data models serve as a blueprint for database developers to create and maintain the actual database. They provide detailed information about the data types, indexing strategies, storage requirements, and other technology-specific considerations needed to optimize the database’s performance, security, and maintainability.

Components: Physical data models include components from the logical data model, as well as additional elements, such as table names, column names, data types, indexes, and constraints, such as primary keys, foreign keys, unique constraints, and check constraints. These components are defined based on the specific database technology being used, ensuring optimal implementation and performance.

Methodologies: Creating physical data models typically involves using data definition language (DDL) scripts, which are specific to the database technology being used. Additionally, database modeling tools, such as ERwin, PowerDesigner, or SQL Developer Data Modeler, can help generate DDL scripts and create physical data models based on the logical data model.

Data Modeling Best Practices

To ensure effective and efficient data modeling, consider the following best practices:

Involve stakeholders: Engage business analysts, database developers, administrators, and end-users throughout the data modeling process to ensure that the data models accurately represent the organization’s data requirements and facilitate effective communication among stakeholders.

Use a consistent naming convention: Adopt a consistent naming convention for entities, attributes, tables, and columns to improve readability and maintainability.

Normalize the data: Apply normalization rules to reduce data redundancy and improve data integrity in the logical and physical data models.

Define and enforce constraints: Use primary keys, foreign keys, and other constraints to enforce data integrity and ensure that the data adheres to the organization’s business rules.

Document the data models: Maintain detailed documentation for the data models, including descriptions of entities, attributes, relationships, and constraints, to facilitate understanding, communication, and maintenance.

Data Modeling Tools

Various data modeling tools are available to assist in creating and maintaining data models. Some popular data modeling tools include:

ERwin Data Modeler: A widely-used data modeling tool that supports conceptual, logical, and physical data modeling, as well as reverse-engineering, code generation, and model validation.

SAP PowerDesigner: A comprehensive data modeling and enterprise architecture tool that supports data modeling, process modeling, and application modeling.

SQL Developer Data Modeler: A free data modeling tool from Oracle that supports logical and physical data modeling for various database technologies, including Oracle Database, Microsoft SQL Server, and IBM DB2.

Microsoft Visio: A diagramming and vector graphics application that can be used for creating conceptual and logical data models using the Entity-Relationship model.

Summary

Data modeling plays a crucial role in database design and development, providing a visual representation of an organization’s data and its relationships. By understanding and mastering the various types of data models — conceptual, logical, and physical — and following best practices, you can create efficient and effective database systems that meet your organization’s needs. Leveraging data modeling tools and staying current with industry trends will further enhance your skills and ensure your success in the rapidly evolving world of database design and development.