Introduction to Data Exploration

Data exploration is a critical initial step in the data analysis process that involves understanding, visualizing, and summarizing the data to gain insights and identify patterns, trends, and anomalies. It helps analysts and data scientists make informed decisions during model building and feature engineering, ultimately improving the performance of machine learning and statistical models. This in-depth guide to data exploration will cover various techniques, visualization methods, and best practices to help you effectively explore and analyze data.

1. Understanding Data Exploration

Data exploration can be broadly categorized into two types: univariate and multivariate analysis. Univariate analysis examines one variable at a time, for example its distribution, center, and spread. Multivariate analysis examines the relationships among two or more variables; the two-variable case is often called bivariate analysis.

2. Data Exploration Techniques

There are several techniques for data exploration, including:

a. Descriptive Statistics: Summarize the main features of the data using measures such as mean, median, mode, standard deviation, and range.

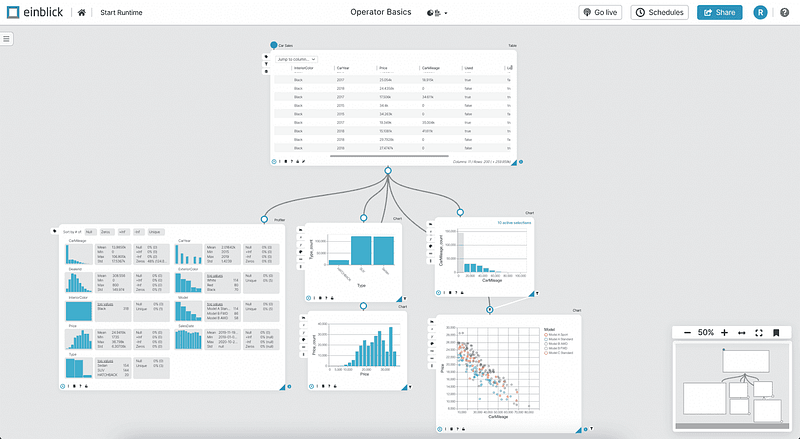

b. Data Visualization: Use graphical representations to display and understand the data, such as histograms, box plots, scatter plots, and heatmaps.

c. Correlation Analysis: Assess the strength and direction of relationships between variables using correlation coefficients, such as Pearson or Spearman.

d. Outlier Detection: Identify unusual data points that deviate significantly from the rest of the data, using techniques like the Z-score or the IQR rule (also known as Tukey's fences).
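The descriptive statistics and correlation coefficients listed above can be sketched with Python's standard library alone. This is a minimal illustration, not a definitive implementation: the `hours` and `scores` data are made up, and the helper names `describe`, `pearson`, `ranks`, and `spearman` are hypothetical. In practice a library such as pandas or SciPy would usually be used instead.

```python
import math
import statistics

# Hypothetical sample: hours studied and exam scores for eight students.
hours = [1.0, 2.0, 2.0, 3.0, 4.0, 5.0, 6.0, 9.0]
scores = [52.0, 55.0, 61.0, 60.0, 68.0, 71.0, 75.0, 90.0]


def describe(values):
    """Return common descriptive statistics for a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "range": max(values) - min(values),
    }


def pearson(x, y):
    """Pearson correlation: strength of the linear relationship."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values):
    """Return 1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


print(describe(hours))
print("Pearson:", round(pearson(hours, scores), 3))
print("Spearman:", round(spearman(hours, scores), 3))
```

Pearson measures linear association, while Spearman only depends on the ordering of the values, so it is less sensitive to extreme observations and to monotonic but non-linear relationships.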

3. Data Visualization Methods

Effective data visualization is essential for successful data exploration. Some popular visualization techniques include:

a. Histograms: Show the distribution of a single continuous variable by grouping data into bins and displaying the frequency of each bin.

b. Box Plots: Display the distribution of a continuous variable by illustrating the median, quartiles, and potential outliers.

c. Scatter Plots: Visualize the relationship between two continuous variables, where each data point represents an observation.

d. Heatmaps: Represent the relationship between multiple variables in a matrix format, using colors to indicate the magnitude of the correlation or association.

e. Bar Charts: Compare categorical variables by displaying the frequency or proportion of each category.
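To make the first two chart types concrete, the sketch below computes the numbers that a histogram and a box plot are drawn from: bin counts and the five-number summary. It uses only the standard library and prints a rough text histogram instead of calling a plotting library. The `ages` data and the function names are hypothetical, and the equal-width binning assumes the values are not all identical.

```python
import statistics

# Hypothetical sample of ages.
ages = [21, 22, 23, 25, 28, 30, 31, 35, 40, 41]


def histogram_counts(values, bins):
    """Count values in `bins` equal-width intervals spanning [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins  # assumes hi > lo
    counts = [0] * bins
    for v in values:
        # The maximum value is placed in the last bin rather than a new one.
        index = min(int((v - lo) / width), bins - 1)
        counts[index] += 1
    return counts


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max), the basis of a box plot."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return (min(values), q1, median, q3, max(values))


counts = histogram_counts(ages, bins=4)
for i, count in enumerate(counts):
    print(f"bin {i}: {'#' * count}")

print(five_number_summary(ages))
```

Different tools use slightly different quartile conventions, so the Q1 and Q3 values from a plotting library may differ a little from the ones computed here.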

4. Handling Missing Values

Missing values are a common issue in data exploration and can lead to biased estimates and inaccurate analyses. Strategies for handling missing values include:

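a. Deletion: Remove observations with missing values from the dataset. This is simple, but it shrinks the sample and can bias results unless the values are missing completely at random.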

b. Imputation: Fill in missing values with a summary statistic such as the mean, median, or mode, or with more advanced methods such as k-Nearest Neighbors imputation.

c. Model-Based Methods: Use statistical or machine learning models to estimate missing values based on the relationships between variables.
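Deletion and simple imputation can be sketched as follows. This is an illustrative example under stated assumptions: missing entries are represented as `None`, the `incomes` column is made up, and `drop_missing` and `impute` are hypothetical helper names. k-Nearest Neighbors and model-based imputation are not shown.

```python
import statistics

# Hypothetical column with missing entries marked as None.
incomes = [42.0, None, 38.0, 55.0, None, 47.0, 120.0]


def drop_missing(values):
    """Deletion: keep only the observed values."""
    return [v for v in values if v is not None]


def impute(values, strategy="mean"):
    """Imputation: replace each missing value with a summary statistic."""
    observed = drop_missing(values)
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


print(drop_missing(incomes))
print(impute(incomes, "mean"))
print(impute(incomes, "median"))
```

In this sample the single large value pulls the mean well above the median, which is one reason the median is often preferred for skewed variables.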

5. Outlier Detection and Treatment

Outliers can significantly impact the performance of machine learning models and statistical analyses. Techniques for detecting and treating outliers include:

a. Z-score: Calculate the number of standard deviations each data point lies from the mean, and consider points whose absolute Z-score exceeds a chosen threshold (commonly 3) as outliers.

b. IQR: Identify outliers as data points falling below the first quartile minus 1.5 times the IQR or above the third quartile plus 1.5 times the IQR, where the IQR (interquartile range) is the third quartile minus the first.

c. Winsorizing: Replace values beyond chosen percentiles (for example, the 5th and 95th) with the values at those percentiles, limiting the effect of outliers on the analysis without removing observations.
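The three techniques above can be sketched in a few lines of standard-library Python. The `readings` data and the function names are hypothetical, and the defaults (a Z-score threshold of 3, a multiplier of 1.5, and the 5th and 95th percentiles) are common conventions rather than fixed rules.

```python
import statistics

# Hypothetical sensor readings with one suspicious value.
readings = [10, 11, 12, 12, 13, 13, 14, 15, 16, 60]


def zscore_outliers(values, threshold=3.0):
    """Flag values whose absolute Z-score exceeds the threshold."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation
    return [v for v in values if abs(v - mean) / sd > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k * IQR, Q3 + k * IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


def winsorize(values, lower_pct=5, upper_pct=95):
    """Clamp values to the given lower and upper percentiles."""
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    lo, hi = cuts[lower_pct - 1], cuts[upper_pct - 1]
    return [min(max(v, lo), hi) for v in values]


print(zscore_outliers(readings))                 # threshold of 3
print(zscore_outliers(readings, threshold=2.5))  # looser threshold
print(iqr_outliers(readings))
print(winsorize(readings))
```

On this small sample the Z-score rule with a threshold of 3 flags nothing, because the extreme value inflates the standard deviation it is measured against, while the IQR rule flags 60. This is one reason the quartile-based rule is often preferred for small or heavily skewed samples.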

6. Feature Engineering

Feature engineering involves creating new variables or modifying existing variables to improve model performance. Techniques for feature engineering include:

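a. Feature Scaling: Put variables on a comparable scale, either by normalizing them to a fixed range such as 0 to 1 (min-max scaling) or by standardizing them to zero mean and unit standard deviation.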

b. Feature Transformation: Apply mathematical transformations, such as logarithmic, square root, or power transformations, to modify variable distributions.

c. Feature Selection: Identify the most important variables using techniques like forward selection, backward elimination, or LASSO regularization.
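Scaling and transformation can be illustrated with a short standard-library sketch. The `prices` data and the function names are hypothetical; both scaling functions assume the values are not all identical, and the log transform assumes non-negative inputs. Feature selection is not shown, since it depends on a fitted model.

```python
import math
import statistics

# Hypothetical right-skewed variable spanning several orders of magnitude.
prices = [1.0, 10.0, 100.0, 1000.0]


def min_max_scale(values):
    """Normalize values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]  # assumes hi > lo


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # assumes sd > 0
    return [(v - mean) / sd for v in values]


def log_transform(values):
    """Apply log(1 + x), which compresses large values and accepts zeros."""
    return [math.log1p(v) for v in values]


print(min_max_scale(prices))
print(standardize(prices))
print(log_transform(prices))
```

After the log transform the four values are roughly evenly spaced, whereas min-max scaling alone leaves three of them crowded near zero.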

7. Best Practices for Data Exploration

a. Understand the Data: Familiarize yourself with the data’s structure, context, and domain-specific terminology to ensure accurate interpretation and analysis.

b. Clean and Preprocess Data: Address data quality issues, such as missing values, inconsistencies, and outliers, early in the exploration, since many of them only surface once you start examining the data.

c. Use Appropriate Visualizations: Choose visualization techniques that effectively represent the data and clearly convey the intended insights.

d. Interpret Results Carefully: Consider potential biases, confounding variables, and limitations in your analysis when drawing conclusions.

e. Collaborate with Domain Experts: Engage with subject matter experts to ensure your analysis aligns with domain knowledge and addresses relevant business questions.

f. Iterate and Refine: Data exploration is an iterative process; continually refine your analysis based on new insights and feedback from stakeholders.

8. Applications of Data Exploration

Data exploration is widely used across various industries and domains, including:

a. Finance: Analyze financial data to identify investment opportunities, detect fraud, and optimize portfolio management.

b. Healthcare: Explore medical data to support diagnosis, treatment planning, and public health initiatives.

c. Marketing: Analyze customer data to develop targeted marketing campaigns, segment customers, and optimize pricing strategies.

d. Manufacturing: Examine production data to identify inefficiencies, optimize processes, and improve product quality.

e. Sports: Analyze player performance data to inform coaching decisions, develop game strategies, and support player recruitment.

9. Challenges in Data Exploration

Data exploration can present several challenges, including:

a. Data Quality: Inaccurate, inconsistent, or missing data can lead to misleading or erroneous conclusions.

b. High Dimensionality: Handling datasets with a large number of variables can be computationally expensive and challenging to visualize and interpret.

c. Scalability: Analyzing large datasets can require significant computational resources and specialized tools or techniques.

d. Subjectivity: Data exploration often involves subjective decisions, such as choosing visualization techniques or determining outliers, which can influence the analysis results.

10. Overcoming Data Exploration Challenges

To address these challenges, consider the following approaches:

a. Invest in Data Quality: Implement data validation, cleaning, and preprocessing techniques to ensure the accuracy and consistency of your data.

b. Dimensionality Reduction: Apply techniques like Principal Component Analysis (PCA) or feature selection methods to reduce the complexity of high-dimensional data.

c. Leverage Big Data Technologies: Utilize distributed computing frameworks, such as Apache Spark or Hadoop, and cloud-based platforms to analyze large datasets efficiently.

d. Establish Guidelines and Best Practices: Develop a standardized approach to data exploration, including guidelines for visualization techniques, outlier detection, and feature engineering, to reduce subjectivity and ensure consistency across analyses.

Summary

Data exploration is a vital step in the data analysis process, enabling analysts and data scientists to understand, visualize, and interpret data to support decision-making and model development. By mastering various data exploration techniques, visualization methods, and best practices, you can effectively analyze complex datasets and derive valuable insights to drive business success. As you continue your data exploration journey, remember to stay current with the latest developments and trends in the field, ensuring your analyses remain relevant, accurate, and impactful.