Introduction

Neural networks are among the most widely used tools in artificial intelligence. Loosely inspired by the human brain, they pass data through layers of simple computational units to recognize patterns and interpret data. The mechanism that turns an input into a prediction is called forward propagation. This guide explains how forward propagation works, step by step, and where it fits in the way a neural network learns.

Understanding Neural Networks and Forward Propagation

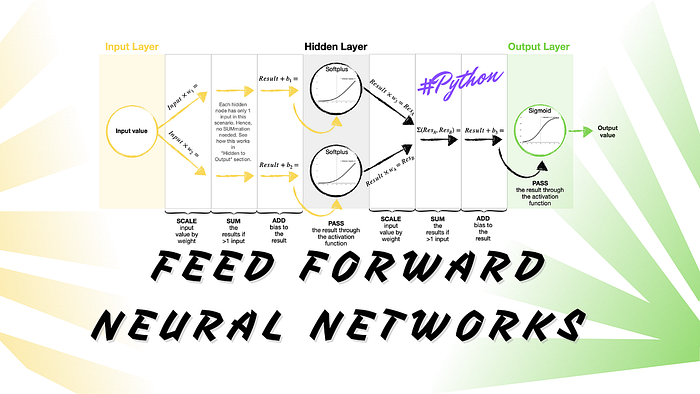

A neural network is a model that learns relationships in a set of data using a structure loosely modeled on the human brain. It consists of interconnected layers of nodes, or “neurons.” Each layer receives input data, transforms it, and passes the result on to the next layer. The final layer of the network produces the output.

Forward propagation is the first phase of every learning iteration in a neural network, and it is also how a trained network makes predictions. Each layer multiplies its inputs by weights, adds biases, and passes the result through an activation function; that result then becomes the input for the next layer. The process repeats layer by layer until the final layer produces the output.
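
In symbols, the computation for one layer can be written as follows. The notation is an assumption introduced here for illustration: x is the input vector, W and b are the weight matrix and bias vector of layer l, f is that layer's activation function, and L is the number of layers.

```latex
a^{(0)} = x, \qquad
z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}, \qquad
a^{(l)} = f^{(l)}\!\left(z^{(l)}\right), \qquad l = 1, \dots, L
```

The network's output is the final activation, a^{(L)}.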

The Steps of Forward Propagation

1. Initializing Weights and Biases

Every connection into a neuron has its own weight, and every neuron has a single bias. A weight determines how strongly the corresponding input influences the neuron, while the bias shifts the neuron’s weighted sum independently of its inputs. Before training, the weights are typically set to small random values; the biases are often set to zero or to small random values as well.
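
As a minimal sketch of this initialization, the NumPy snippet below builds the parameters for one fully connected layer. The function name `init_layer`, the layer sizes, and the Gaussian scale of 0.1 are assumptions chosen for illustration; practical libraries use more refined schemes.

```python
import numpy as np

def init_layer(n_inputs, n_neurons, rng):
    """Return (W, b) for one fully connected layer (hypothetical helper)."""
    # One weight per (neuron, input) pair, drawn from a small Gaussian.
    W = rng.normal(loc=0.0, scale=0.1, size=(n_neurons, n_inputs))
    # One bias per neuron; starting at zero is a common choice.
    b = np.zeros(n_neurons)
    return W, b

rng = np.random.default_rng(seed=0)
W, b = init_layer(n_inputs=3, n_neurons=4, rng=rng)
print(W.shape, b.shape)  # (4, 3) (4,)
```

Each row of `W` holds the weights of one neuron, so a layer with 4 neurons and 3 inputs has 12 weights and 4 biases.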

2. Weighted Sum

With the weights and biases in place, forward propagation begins by calculating a weighted sum for each neuron in the first hidden layer. Each input is multiplied by its corresponding weight, the products are added together, and the neuron’s bias is added to the total.
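
A small worked example may make the arithmetic concrete. The input values, weights, and biases below are made up for illustration; the layer has two neurons and three inputs.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])            # three input values
W = np.array([[0.5, -1.0, 0.25],         # weights of neuron 1
              [1.0,  0.0, -0.5]])        # weights of neuron 2
b = np.array([0.5, -1.0])                # one bias per neuron

# Weighted sum for every neuron at once: z = W x + b
z = W @ x + b

# Neuron 1: 0.5*1 + (-1.0)*2 + 0.25*3 + 0.5  = -0.25
# Neuron 2: 1.0*1 +   0.0*2 + (-0.5)*3 - 1.0 = -1.5
print(z)
```

Writing the layer as a matrix-vector product computes all of its neurons in a single operation, which is why the weights are stored as a matrix.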

3. Activation Function

The weighted sum is then passed through an activation function. Common choices include the sigmoid, ReLU, and tanh functions. The activation function introduces non-linearity into the network; without it, any stack of layers would collapse into a single linear transformation, and the network could not learn or represent more complex patterns.
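
The three activation functions named above can be sketched in a few lines of NumPy. The sample vector `z` is an arbitrary stand-in for a layer's weighted sums.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    # Keeps positive values and replaces negative values with 0.
    return np.maximum(0.0, z)

def tanh(z):
    # Squashes any real number into the range (-1, 1).
    return np.tanh(z)

z = np.array([-2.0, 0.0, 2.0])
print(sigmoid(z))
print(relu(z))
print(tanh(z))
```

Each function is applied element by element, so the output has the same shape as the weighted sums it receives.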

4. Repeat for Each Layer

Steps 2 and 3 are repeated for each subsequent layer in the network, using the output of the previous layer as input for the next.
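
The repetition can be expressed as a loop over the layers. This is a sketch under assumptions: the function name `forward`, the layer sizes, and the use of ReLU in every layer are illustrative choices, and the output layer often uses a different function in practice.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def forward(x, layers, activation=relu):
    """Propagate x through a list of (W, b) pairs, one pair per layer."""
    a = x
    for W, b in layers:
        z = W @ a + b        # step 2: weighted sum
        a = activation(z)    # step 3: activation; a feeds the next layer
    return a

# Hypothetical network: 3 inputs -> 4 hidden neurons -> 2 outputs.
rng = np.random.default_rng(0)
sizes = [3, 4, 2]
layers = [
    (rng.normal(0.0, 0.1, (n_out, n_in)), np.zeros(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

out = forward(np.array([1.0, 2.0, 3.0]), layers)
print(out.shape)  # (2,)
```

The only state carried from one layer to the next is the activation vector `a`, which is what makes the computation a single forward pass.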

5. Output Layer

Once the forward propagation process reaches the output layer of the network, the final output is calculated. In the case of a classification problem, this might involve applying a softmax function to generate probabilities for each class.
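
For the classification case, a softmax can be sketched as below. The `logits` values are made up, and subtracting the maximum before exponentiating is a common numerical-stability trick rather than something the steps above require.

```python
import numpy as np

def softmax(z):
    # Subtracting the max leaves the result unchanged but avoids overflow.
    shifted = z - np.max(z)
    exp = np.exp(shifted)
    return exp / np.sum(exp)

logits = np.array([2.0, 1.0, 0.1])   # raw output-layer values, one per class
probs = softmax(logits)

print(probs)             # non-negative values that sum to 1
print(np.argmax(probs))  # 0, the index of the largest logit
```

The class with the largest raw output always receives the highest probability, so softmax changes the scale of the outputs but not their ranking.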

The Role of Forward Propagation in Learning

The goal of forward propagation is to transform the input data into a form that can be used to make predictions. However, because the weights and biases of the network are initially set to random values, these predictions will not be accurate to start with.

The true learning in a neural network comes from iteratively adjusting the weights and biases to reduce the error of the network’s predictions. After forward propagation has generated a prediction, a loss function measures the error of that prediction. Backpropagation then carries the error backward through the network to compute how much each weight and bias contributed to it, and an optimizer such as gradient descent adjusts them to reduce the error. This cycle of forward propagation and backpropagation is repeated many times, gradually improving the network’s ability to accurately predict the output for given inputs.
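
The cycle can be sketched end to end on a toy problem. Everything specific here is an assumption made for illustration: the XOR data, the 2-3-1 network with a tanh hidden layer and a linear output, the mean squared error loss, the learning rate, and plain gradient descent as the update rule.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (XOR), chosen only for illustration.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
Y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Random starting weights, zero biases: 2 inputs -> 3 hidden -> 1 output.
W1, b1 = rng.normal(0.0, 0.5, (3, 2)), np.zeros(3)
W2, b2 = rng.normal(0.0, 0.5, (1, 3)), np.zeros(1)

learning_rate = 0.1
losses = []

for step in range(200):
    # Forward propagation.
    A1 = np.tanh(X @ W1.T + b1)          # hidden activations, shape (4, 3)
    Y_hat = A1 @ W2.T + b2               # predictions, shape (4, 1)
    losses.append(np.mean((Y_hat - Y) ** 2))

    # Backpropagation: gradients of the loss, output layer first.
    dY_hat = 2.0 * (Y_hat - Y) / len(X)
    dW2 = dY_hat.T @ A1
    db2 = dY_hat.sum(axis=0)
    dZ1 = (dY_hat @ W2) * (1.0 - A1 ** 2)   # tanh'(z) = 1 - tanh(z)^2
    dW1 = dZ1.T @ X
    db1 = dZ1.sum(axis=0)

    # Gradient descent update.
    W1 -= learning_rate * dW1
    b1 -= learning_rate * db1
    W2 -= learning_rate * dW2
    b2 -= learning_rate * db2

print(f"loss before: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Every iteration starts with a forward pass, because the gradients depend on the activations and predictions that pass produces.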

Conclusion

Forward propagation forms the foundation for learning in neural networks. It transforms input data through a series of weights, biases, and activation functions, layer by layer, to generate a final output. The error of that output then drives the adjustment of the weights and biases through backpropagation, improving the network’s predictive accuracy. As you delve deeper into neural networks and machine learning, understanding this fundamental concept will give you a solid base for further exploration.
